The Limits of AI in College Football Analytics - Part 2

AI can only recognize connections already noted in its training data - for anything else, it's lost

If you missed Part 1, I highly recommend taking a few minutes to go back and read it.

The discussion at hand is the limits of AI’s usefulness in college football analytics, specifically in prediction. Covid is controversial, and Covid vaccination even more so; as such, when married to college football, it is perfectly illustrative of my point.

Since I have a Master of Public Health and worked in hospitals during the pandemic, I was able to connect a clinical decision made by a head coach (in this case, Michigan’s Jim Harbaugh) with the outcome of a game vs. the Vegas line.

Absent a connection like this showing up in an AI model’s training data, the AI will never be able to make such a connection. And since the landscape is always evolving and new factors emerge seemingly daily, AI is unlikely to crowd out the best human analysts in college football. (That sound you hear is the collective deep exhalation of a roomful of analysts working for Nick Saban at Alabama).

Now then, let’s connect the wisdom of Leonard Cohen to published science, the expectations of sports bettors, and the outcome on the field. And in the end, I’ll bring it all back to Virginia Tech.

Connecting the Dots

I mentioned the medically illiterate in last week’s article. Perhaps that seemed harsh. Here is what I was talking about. This study showed that fully vaccinated healthcare workers, whose average age at first dose was 47 years, experienced 90% vaccine efficacy at least seven days following the second dose. Fully vaccinated nursing home residents, whose average age at first dose was 84 years, saw 64% efficacy.

Both figures are in broad alignment with the findings in other studies examining short-term efficacy in the fully vaccinated. In contrast, both groups showed negative vaccine efficacy during the 14 days following the first dose, meaning here that those who got the vaccine were more likely to have a laboratory-confirmed positive SARS-CoV-2 test than unvaccinated controls during that time period. For healthcare workers the vaccine was -104% efficacious, while for nursing home patients it was -40% efficacious.
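For readers unfamiliar with the arithmetic, the efficacy figures above can be translated into relative risk. This is a minimal sketch assuming the textbook definition, efficacy = (1 - relative risk) x 100. The function name is made up for illustration, and the study itself may use a different estimator (for example, one based on hazard or odds ratios).

```python
def relative_risk(efficacy_pct):
    """Convert vaccine efficacy (%) to relative risk.

    Assumes the textbook definition VE = (1 - RR) * 100, which may not be
    the exact estimator used in the study quoted in the article.
    """
    return 1.0 - efficacy_pct / 100.0


# Efficacy figures as quoted in the article; labels are for display only.
quoted = {
    "healthcare workers, 7+ days after dose 2": 90,
    "nursing home residents, 7+ days after dose 2": 64,
    "healthcare workers, 14 days after dose 1": -104,
    "nursing home residents, 14 days after dose 1": -40,
}

for label, ve in quoted.items():
    print(f"{label}: VE {ve}% -> relative risk {relative_risk(ve):.2f}")
```

Under that definition, -104% efficacy corresponds to a relative risk of about 2.04, i.e., roughly twice the rate of positive tests seen in the unvaccinated controls.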

Other studies, like this Lancet preprint, showed that the period of positive efficacy lasted around 6.5 months following the second dose.

After that, it went negative (defined here as the vaccinated being more likely than the unvaccinated to get a symptomatic Covid-19 infection). In late 2021, the wave of Omicron infections nationwide suggested that the third shot was having a result more similar to the first shot than the second, i.e., an initial period of negative efficacy. So, in strict scientific terms, assuming the entire Michigan squad had already received a two-shot mRNA series back in the spring, they were likely to be in a window of negative efficacy regardless of whether or not they all got a third shot. But, while that might have been the risk Harbaugh sought to minimize, it was not the biggest concern. Safety was, or should have been.

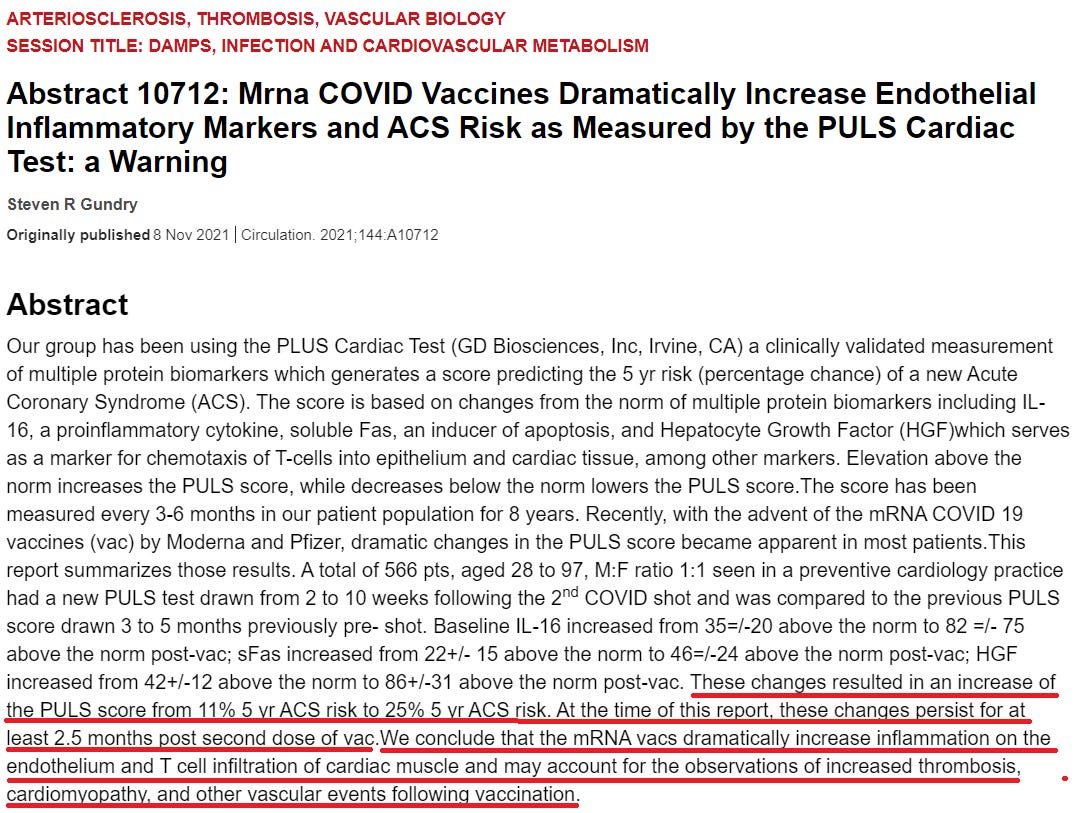

Particularly alarming at the time (and still to this day) were reports of major cardiovascular (blood clots and heart attacks) and neurological (strokes) injuries. One example was then-Wake Forest, now-Notre Dame QB Sam Hartman, who had surgery to remove a blood clot, causing him to miss the start of the 2022 season. A study presented at the Scientific Sessions of the American Heart Association’s annual conference in the fall of 2021 found a near doubling of the five-year risk of Acute Coronary Syndrome in patients who received an mRNA vaccine.

It was clear from the original Pfizer trial data that any potential protective benefit against Covid-19 would be more than erased by increased cardiovascular deaths. In fact, the FDA’s Summary Basis for Regulatory Action document revealed higher all-cause mortality in the vaccine group (21) than the placebo group (17). Pfizer’s trial showed three cardiac deaths for every one Covid death prevented, a stat available in the literature from the beginning, but not noted in the mainstream press and very unlikely to find its way into AI training data, especially given the political data adjustments that are now en vogue in the AI world.

Unlike the U.S., many European countries keep comprehensive and detailed mortality data by vaccination status. A number of these countries noted year-over-year increases in all-cause mortality in 2021, with a study out of Sweden published to a preprint server in 2021 providing a good example.

The study noted that 3,939 out of the 4,034,787 people who received both doses of the vaccine before August 5, 2021 died within two weeks of vaccination. From 2015–2019, during the same spring to summer period observed in the study, an average of 3,300 out of 10.6 million Swedes died every two weeks. That is more than 600 additional deaths among fewer than half as many people.
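The comparison above can be put on a per-capita footing. This is a rough sketch that uses only the figures quoted in this article. The helper name is hypothetical, and the result is a crude rate that makes no adjustment for age or for which groups were vaccinated first.

```python
def deaths_per_100k(deaths, population):
    """Crude (unadjusted) deaths per 100,000 people."""
    return deaths / population * 100_000


# Figures as quoted in the article, not independently verified here.
vaccinated_deaths, vaccinated_pop = 3_939, 4_034_787
baseline_deaths, baseline_pop = 3_300, 10_600_000

vaccinated_rate = deaths_per_100k(vaccinated_deaths, vaccinated_pop)
baseline_rate = deaths_per_100k(baseline_deaths, baseline_pop)

print(f"two weeks after vaccination: {vaccinated_rate:.1f} per 100k")
print(f"2015-2019 two-week average:  {baseline_rate:.1f} per 100k")
print(f"difference in raw deaths:    {vaccinated_deaths - baseline_deaths}")
print(f"cohort size vs. population:  {vaccinated_pop / baseline_pop:.0%}")
```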

The CDC had, from the beginning, said that mild to moderate side effects could occur following vaccination, but one could dig through media reports all day and still find no mention of severe adverse events. That information was all out there, though, hiding in the medical literature.

So, on the side effects front, considering the mass vaccination of Michigan players was to take place just days before the game, my public health training told me that the best-case scenario was that the team would play sluggishly. The CDC’s VAERS database already contained tens of thousands of injury reports, and a 2022 FOIA request brought even more clarity via the V-Safe database, which was populated by vaccinees via a phone-based app.

Thirty-three percent of users reported an adverse health impact of some kind:

12.1% of people participating in V-Safe reported a mild adverse impact

13.3% reported a moderate adverse impact

7.7% reported an adverse impact requiring medical care

Had this dataset been publicly available in late 2021, I would have been even more bearish on the Wolverines.

It’s not like the actual outcome of the game was unimaginable. I ran this game through my latest multiple regression model (which, I should note, is distinct from machine-learning-based artificial intelligence) and the prediction came back 40-12, Georgia.

Rather, the ability to identify and apply a connection that AI could not gives the human a major advantage when it comes to adjusting predictions. The AI would factor in the base x% chance that Michigan pulls the upset, whereas the human would realize that at this point an upset was basically impossible. The human could then adjust the expected point spread range accordingly.
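The kind of adjustment described above can be sketched in a few lines. This is an illustration only, not the regression model mentioned earlier: it assumes the favorite's final margin is roughly normally distributed around the predicted margin, and the 14-point standard deviation, the 7-point model margin, and the size of the human adjustment are all placeholder values invented for the example.

```python
import math


def upset_probability(expected_margin, sd=14.0):
    """Chance the underdog wins outright.

    Assumes the favorite's margin ~ Normal(expected_margin, sd).
    sd=14.0 is a placeholder assumption, not a fitted value.
    """
    z = (0.0 - expected_margin) / sd
    # Standard normal CDF evaluated at z, via the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


model_margin = 7.0       # hypothetical model output: favorite by 7
human_adjustment = 14.0  # hypothetical extra points for the unmodeled factor

base = upset_probability(model_margin)
adjusted = upset_probability(model_margin + human_adjustment)

print(f"model only:      {base:.1%} chance of an upset")
print(f"with adjustment: {adjusted:.1%} chance of an upset")
```

The point of the sketch is the direction of the change: shifting the expected margin by a factor the model cannot see shrinks the upset probability, and the expected spread range moves with it.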

Virginia Tech and Asymmetric Information

No dataset has ever existed, or likely ever will exist, that can make the connections I have drawn above. For AI to do this, it would need to ingest something like a study evaluating the impact of vaccinating an entire football team with a novel biologic days before a big game. Obviously, at the time there was nothing to evaluate - Michigan was the first known team to opt for this course of action. Without a robust collection of scientific data or significant commentary in the media or an online space, one will have nothing on which to train an AI model. AI can only look backward and recognize connections already made in the ingested data, then apply them moving forward.

The end result, as far as I was concerned, was the best-case scenario for Michigan. They played sluggishly and got trounced.

As an analyst, I am always on the lookout for variables that will supersede models. Examples like the one presented above are rare. More common are X-factor variables, the most common of which is an injury to an important player, e.g., the starting quarterback. In a previous model that looked only at Virginia Tech data, I actually included a QB health field. The problem was that I had to manually enter the data, quantifying my own understanding based on public reports. As a result it was biased and often inaccurate (undisclosed injuries were a gaping hole).

Player eligibility/disciplinary actions are other factors that can have a major impact on the expected outcome of a game. My ears always perk up when I hear Chris Coleman of Techsideline begin a sentence with the words, “We’ve heard…”

Again, intuitively, fans get all this. Hence the long-running joke on message boards and Twitter about sauces. For this reason, when I share a modelled prediction for a game outcome, I also offer my own thoughts. I often agree, at least directionally, with the model, but when I disagree, it is usually because of a hard-to-quantify factor or a Leonard Cohen variable.

Like any dealer he was watching for the card that is so high and wild

He'll never need to deal another

He was just some Joseph looking for a manger

Brent Pry has stated on the record that he is not much into analytics. I’d love it if one of the beat reporters asked him why, and how he defines analytics. If he means it in pure science terms, I’d agree with him. As the maxim goes:

All models are wrong. Some models are useful.

I’m in the camp that sees analytics as both an art and a science. The science part can get you in the ballpark in regard to most any question. The art part is what takes you the last mile. Unless and until AI takes up art, its utility in the college football analytics space will remain limited.